PassiveLogic is Transforming AI's Energy Efficiency

AI is the defining technology of this decade, but it comes with steep energy costs. The energy required to power AI’s computational infrastructure continues to grow exponentially. The International Energy Agency’s Electricity 2024 report forecasts that global data center electricity consumption could double between 2022 and 2026, with AI playing a significant role in that growth. Consumption could reach more than 1,000 TWh by 2026, roughly equivalent to the annual electricity consumption of Japan. This unsustainable trajectory threatens both technological progress and our planet.

The energy intensiveness of today’s leading AI models is a roadblock to innovation. It limits AI's potential in areas like robotics, autonomous vehicles, and edge computing, and it inhibits AI’s ability to get smarter at runtime. In addition, today’s AI development is artificially split between back-room training and runtime inferencing. Because AI models cannot learn in real time at the edge, the customer applications they power largely remain static. Models wait for infrequent updates from a remote lab full of engineers, forgoing crowdsourced insights and losing time that could be spent improving the application in the field.

Deep Learning is Built on Differentiable Computing

The foundation of modern AI is differentiable computing: the ability to automatically compute the derivatives of code by running its computation in reverse. This kind of computation was the seminal breakthrough that enabled deep learning to emerge from decades of AI’s failure to scale.
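To make "computed in reverse" concrete, here is a minimal sketch of reverse-mode automatic differentiation in Python. It is a toy for illustration only, not how Differentiable Swift or its compiler works; the names `Var`, `sin`, and `backward` are hypothetical. Each value records which inputs produced it and the local derivative with respect to each, and `backward` replays those records from the output back to the inputs, applying the chain rule at every step.

```python
import math


class Var:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (input Var, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    __rmul__ = __mul__


def sin(x):
    return Var(math.sin(x.value), ((x, math.cos(x.value)),))


def backward(output):
    """Reverse pass: propagate d(output)/d(node) from the output to every input."""
    order, seen = [], set()

    def visit(node):  # topological order: inputs before the values built from them
        if id(node) not in seen:
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)

    visit(output)
    output.grad = 1.0
    for node in reversed(order):  # walk the computation backward
        for parent, local in node.parents:
            parent.grad += local * node.grad  # chain rule


x, y = Var(2.0), Var(3.0)
f = x * y + sin(x)     # forward pass: f(x, y) = x*y + sin(x)
backward(f)            # reverse pass
print(x.grad, y.grad)  # df/dx = y + cos(x), df/dy = x
```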

Neural networks have existed since the 1950s but were historically limited to very small problem sets, because solving anything beyond the most trivial models took too much compute time. The methods available at the time took exponentially more time with each additional parameter in play. Differentiable computing solves this, speeding up training by six to eight orders of magnitude. Training is how AI applications learn, and it is the key feature of deep learning.
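One common way to see where speedups of this scale come from is to count function evaluations. Without derivatives of the code, a gradient has to be estimated numerically, for example by finite differences, which costs one extra evaluation per parameter. The sketch below counts those evaluations for a hypothetical toy loss; the helper names are our own. Reverse-mode autodiff, by contrast, returns every partial derivative for a small constant multiple of one evaluation, regardless of the number of parameters.

```python
def count_calls(f):
    """Wrap f so we can see how many times it gets evaluated."""
    def wrapped(params):
        wrapped.calls += 1
        return f(params)
    wrapped.calls = 0
    return wrapped


def loss(params):
    # Hypothetical toy loss: squared distance from a target of all ones.
    return sum((p - 1.0) ** 2 for p in params)


def finite_difference_gradient(f, params, h=1e-6):
    """Forward-difference gradient estimate: costs len(params) + 1 calls to f."""
    base = f(params)
    grad = []
    for i in range(len(params)):
        bumped = list(params)
        bumped[i] += h
        grad.append((f(bumped) - base) / h)
    return grad


counted = count_calls(loss)
grad = finite_difference_gradient(counted, [0.0] * 10)
print(counted.calls)  # 11 evaluations for 10 parameters
# With 1,000,000 parameters this becomes 1,000,001 evaluations per gradient step.
```

At a million parameters, each finite-difference gradient is on the order of a million times more work than a reverse-mode gradient, whose cost does not grow with the parameter count.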

Differentiable computing has always been a bigger story than homogeneous tensor models, yet it has remained restricted to niche use cases, trapped inside the deep learning frameworks that employ it. The range of programs that could benefit from it is vast and, unfortunately, largely untapped. There is massive, unexplored potential for AI, and we’re just getting started.

New Frontiers in Deep Learning

The industry needs a new approach to deep learning. Our team began with the question: “What if any code, no matter its shape, could be trained like simple tensor models? And what if it was also blazing fast and energy efficient?” PassiveLogic has been building this compiler solution in the Swift language and leading improvements to the autodiff features in the Swift toolchain, an effort we refer to as “Differentiable Swift.”

Our work on Differentiable Swift focuses on answering four questions:

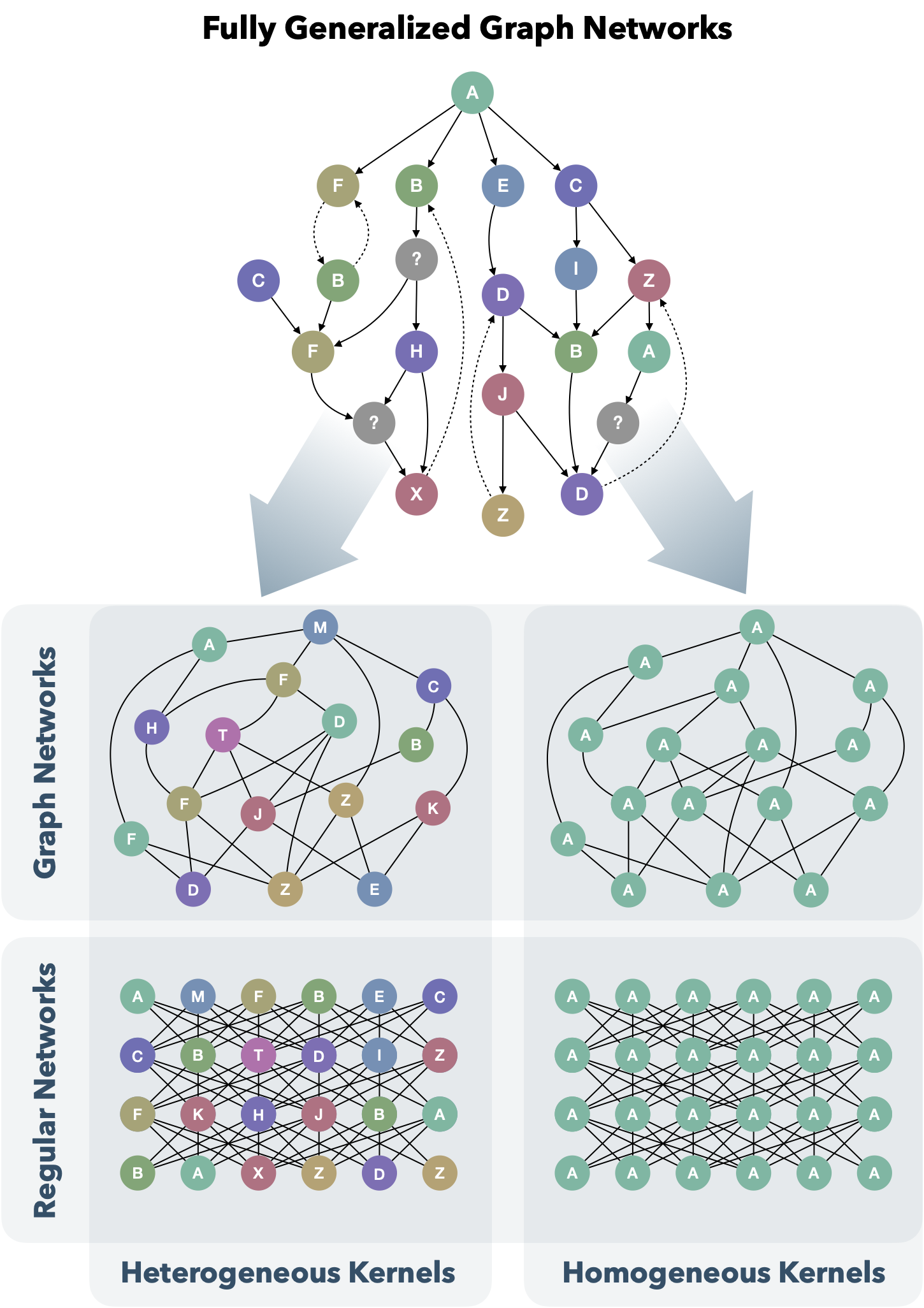

- What if we could enable heterogeneous, fully generalized graph networks—no matter how complex or general purpose?

- What if AI and application code could merge into a single performant systems language?

- What if autodiff wasn’t just for training, but fast enough for inferencing models in arbitrary dimensions?

- What if model learning needed so little energy that it could happen at the edge, at runtime, inside battery-powered applications like robots?

Image credit: Troy Harvey

PassiveLogic’s extensive work on Differentiable Swift is expanding applications for novel AI models, moving beyond the current deep learning orthodoxy. These contributions open doors to countless new AI methodologies where experimentation was previously difficult—with uses in everything from physics, economics, and philosophy to engineering, autonomous systems, and user experience. While current deep learning has enabled a very specific approach to AI, there is so much more to explore.

Today, AI and a climate-positive future seem incompatible. However, by developing more efficient and innovative models, we can turn this dynamic into a positive feedback cycle in which AI helps us overcome challenges, like climate change, that have seemed intractable.

Our latest compiler optimizations achieve record-breaking energy efficiency, demonstrating PassiveLogic’s commitment to building AI for good.

Redefining Efficiency: The Data Speaks for Itself

To understand how Differentiable Swift performs relative to other frameworks, we compared our recently optimized toolchain to the industry-standard frameworks TensorFlow (Google) and PyTorch (Meta). Because Differentiable Swift is not opinionated about the form of computation, it can efficiently handle heterogeneous, scalar calculations. The popular frameworks’ narrow focus on massive, homogeneous matrix multiplications for neural networks limits their ability to execute AI calculations that do not fit the deep learning paradigm.

While Differentiable Swift delivered faster-than-ever compute speeds, our optimizations also produced a jaw-dropping decrease in energy use. These latest improvements in Differentiable Swift have set a new, and incredibly exciting, precedent for energy efficiency.

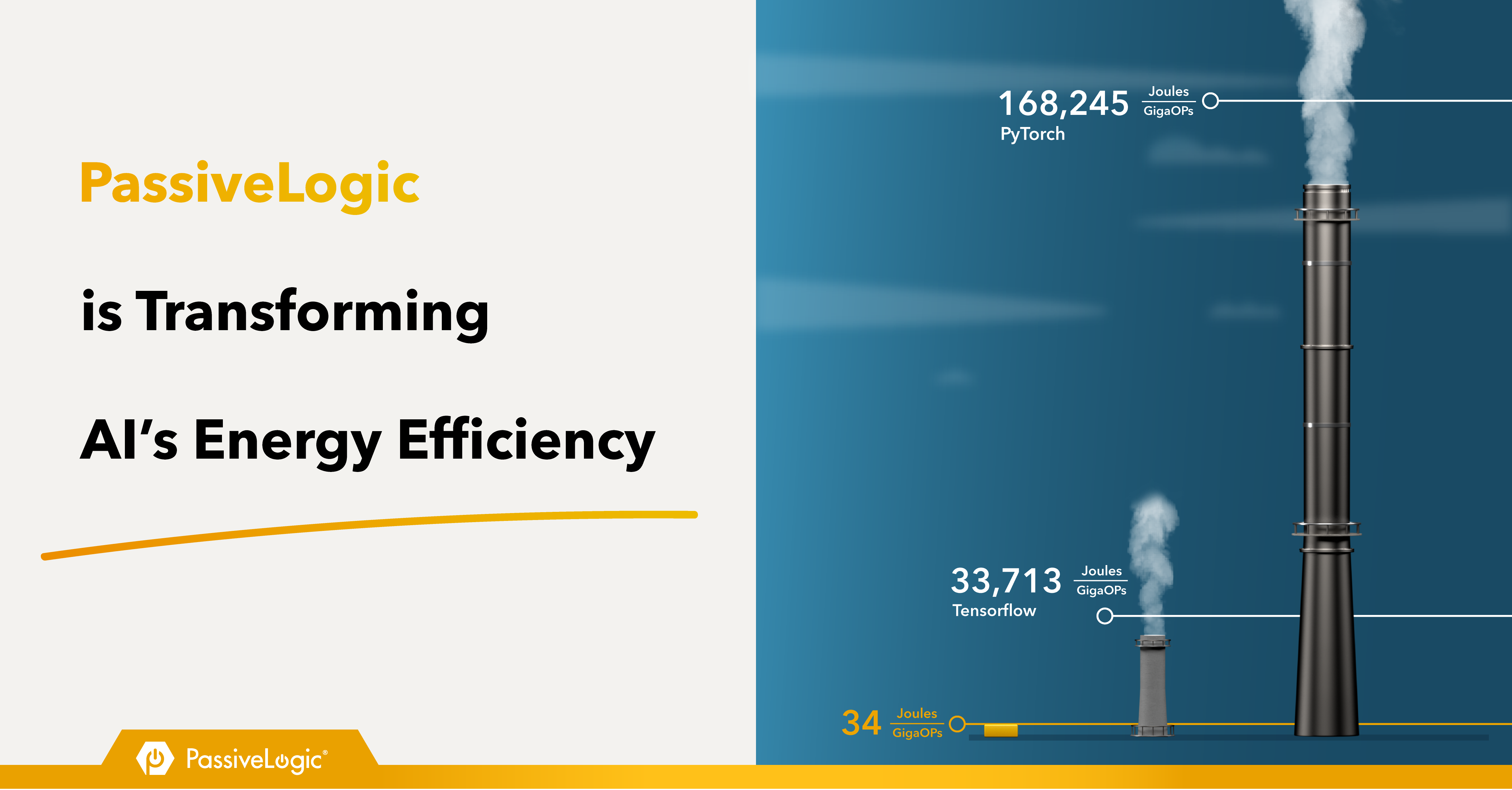

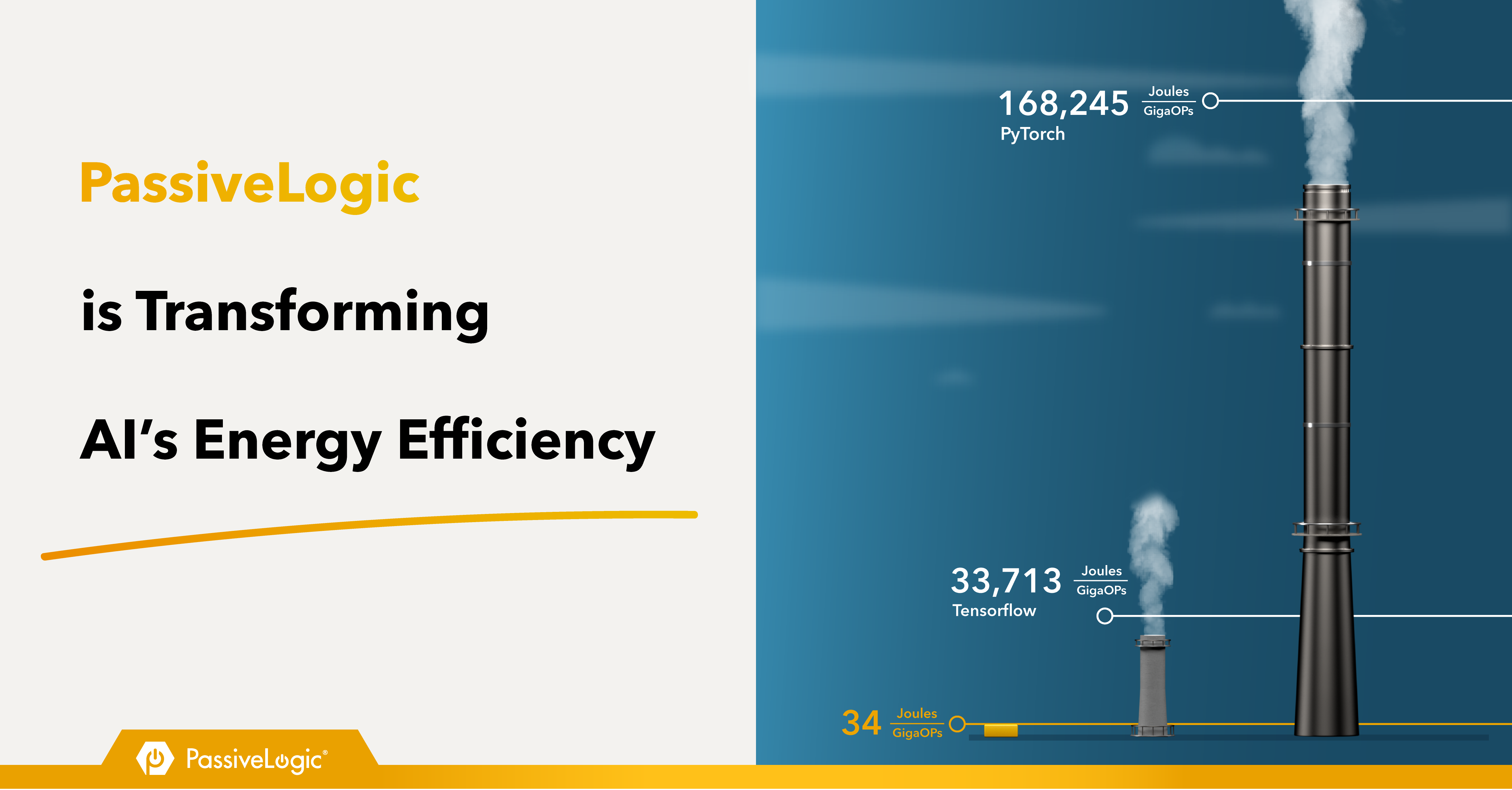

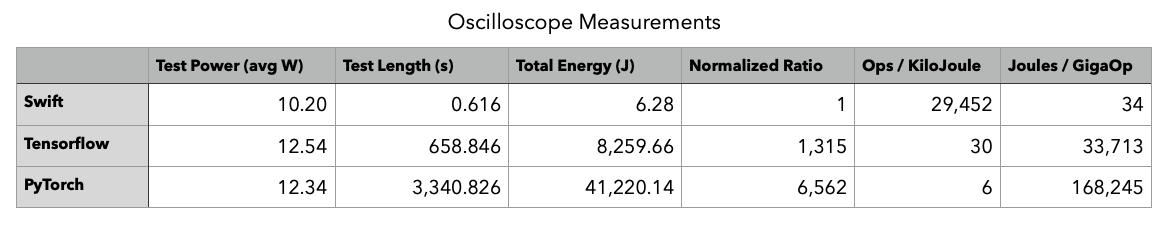

When comparing the amount of energy required to accomplish the same number of computations, Differentiable Swift achieved a staggering 992x energy efficiency improvement over TensorFlow and a 4,948x energy efficiency improvement over PyTorch. In other words, Differentiable Swift used roughly 99.9% less energy than either framework for the same work.

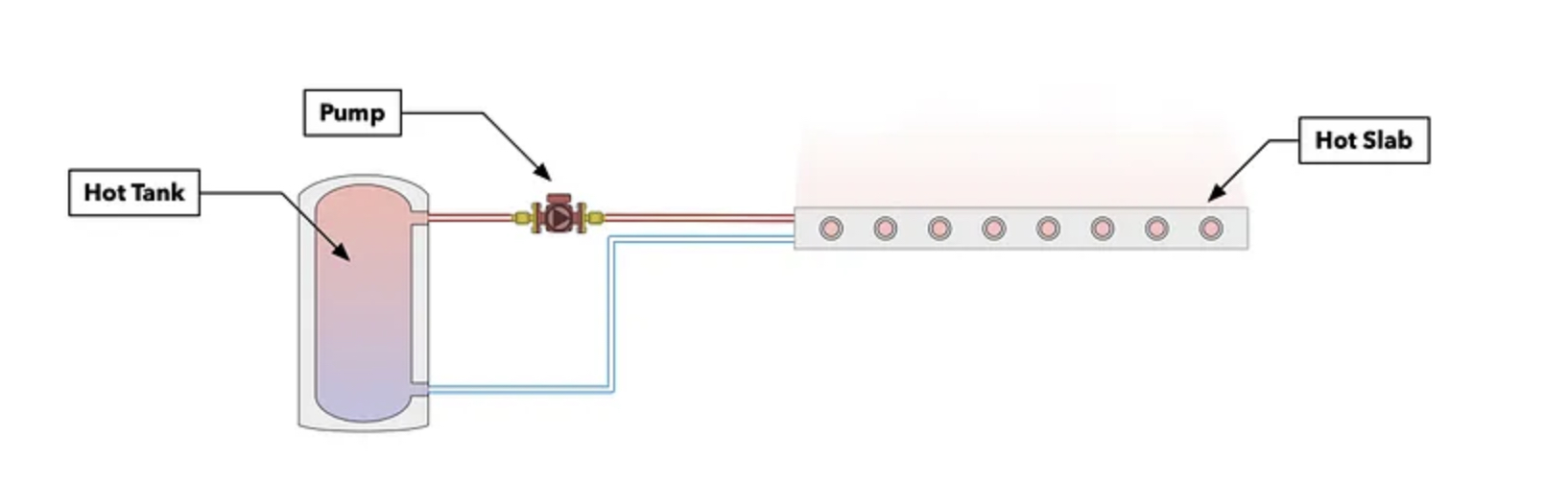

The benchmark uses physics, which is core to PassiveLogic’s digital twin technology. Yet the test’s structure applies to almost any code you can write: it all boils down to a for-loop and algebra. For this test, we chose a thermodynamics-based equipment simulation of heat transfer through a concrete slab in a building. Although this is a concrete, physical model, the same paradigm extends to abstract models such as graph networks and neural nets. We will publish other benchmarks over the coming months.
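The benchmark code itself is on PassiveLogic’s GitHub; the sketch below is not that code. It is a hypothetical two-node model (a tank and a slab exchanging heat through a pipe, with made-up heat capacities, conductance, and time step) meant only to show what "a for-loop and algebra" looks like, together with a hand-written reverse pass that walks the loop backward to get the derivative of the final slab temperature with respect to the pipe conductance `k`. A differentiable compiler generates this kind of reverse pass automatically.

```python
# Hypothetical parameters, chosen only for illustration.
C_TANK = 4.0e5   # tank heat capacity, J/K
C_SLAB = 2.0e6   # slab heat capacity, J/K
T_TANK0 = 60.0   # initial tank temperature, deg C
T_SLAB0 = 20.0   # initial slab temperature, deg C
DT = 1.0         # time step, s
STEPS = 1000


def simulate(k):
    """Forward pass: explicit-Euler heat exchange; k is pipe conductance in W/K."""
    tank, slab = T_TANK0, T_SLAB0
    history = []  # saved states, needed by the reverse pass
    for _ in range(STEPS):
        history.append((tank, slab))
        q = k * (tank - slab)  # heat flow from tank to slab, W
        tank = tank - DT * q / C_TANK
        slab = slab + DT * q / C_SLAB
    return tank, slab, history


def final_slab_temp_and_grad(k):
    """Reverse pass: d(final slab temperature)/dk, visiting the steps in reverse."""
    _, final_slab, history = simulate(k)
    g_tank, g_slab = 0.0, 1.0  # seed: sensitivity of the output to the final state
    g_k = 0.0
    for tank, slab in reversed(history):
        diff = tank - slab
        g_q = -DT / C_TANK * g_tank + DT / C_SLAB * g_slab  # adjoint of q
        g_k += g_q * diff  # because q = k * diff
        g_diff = g_q * k
        g_tank, g_slab = g_tank + g_diff, g_slab - g_diff
    return final_slab, g_k


temp, dtemp_dk = final_slab_temp_and_grad(50.0)
print(temp, dtemp_dk)
```

A central finite difference on `simulate` is a quick way to check the hand-written reverse pass.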

Image description: A schematic of the equipment system we are modeling for this benchmark, including a tank, a flat radiator slab, and a pipe connecting them.

Here, AI efficiency is measured as the energy consumed per compute operation, expressed in joules per giga-operation (J/GOps). We chose giga-operations as the unit because our work in Differentiable Swift is meant for general-purpose compute: we count all operations, not just floating-point operations and matrix multiplications (matmul).
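As a worked example of the unit, J/GOps is total energy divided by the operation count in billions. Equivalently, since a watt is a joule per second, it is average power divided by throughput in billions of operations per second. The helper names and numbers below are hypothetical, and how operations are counted is left to the caller, since the methodology for counting them is not spelled out here.

```python
def joules_per_gigaop(energy_j, operations):
    """Energy efficiency in J/GOps: joules per billion operations (lower is better)."""
    return energy_j / (operations / 1e9)


def joules_per_gigaop_from_rate(avg_power_w, ops_per_second):
    """Same metric from power and throughput: (J/s) / (GOps/s) = J/GOps."""
    return avg_power_w / (ops_per_second / 1e9)


# Hypothetical run: 10 W average for 2 s while executing 4 billion operations.
print(joules_per_gigaop(10.0 * 2.0, 4e9))      # 5.0
print(joules_per_gigaop_from_rate(10.0, 2e9))  # 5.0
```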

The Methodology

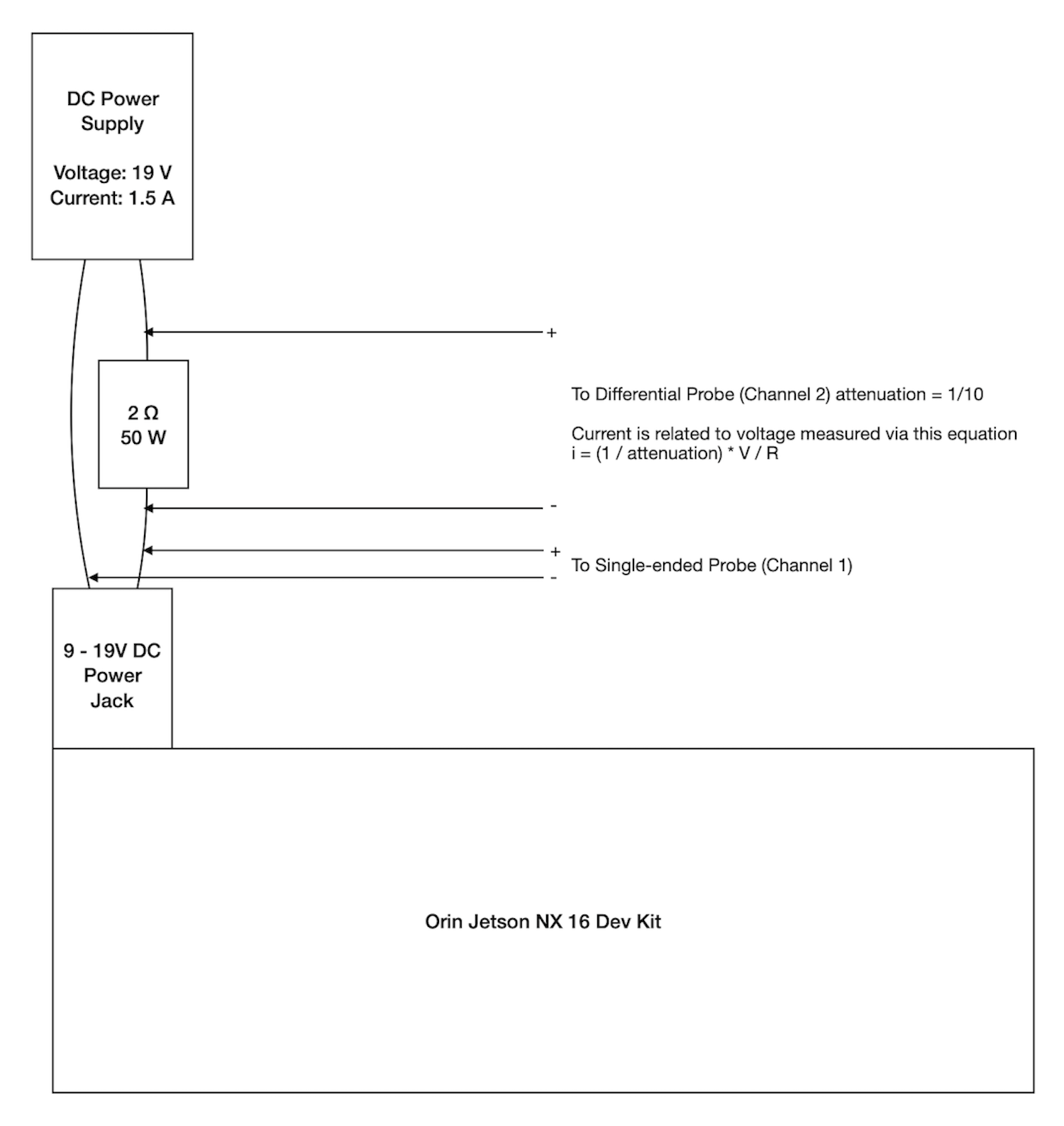

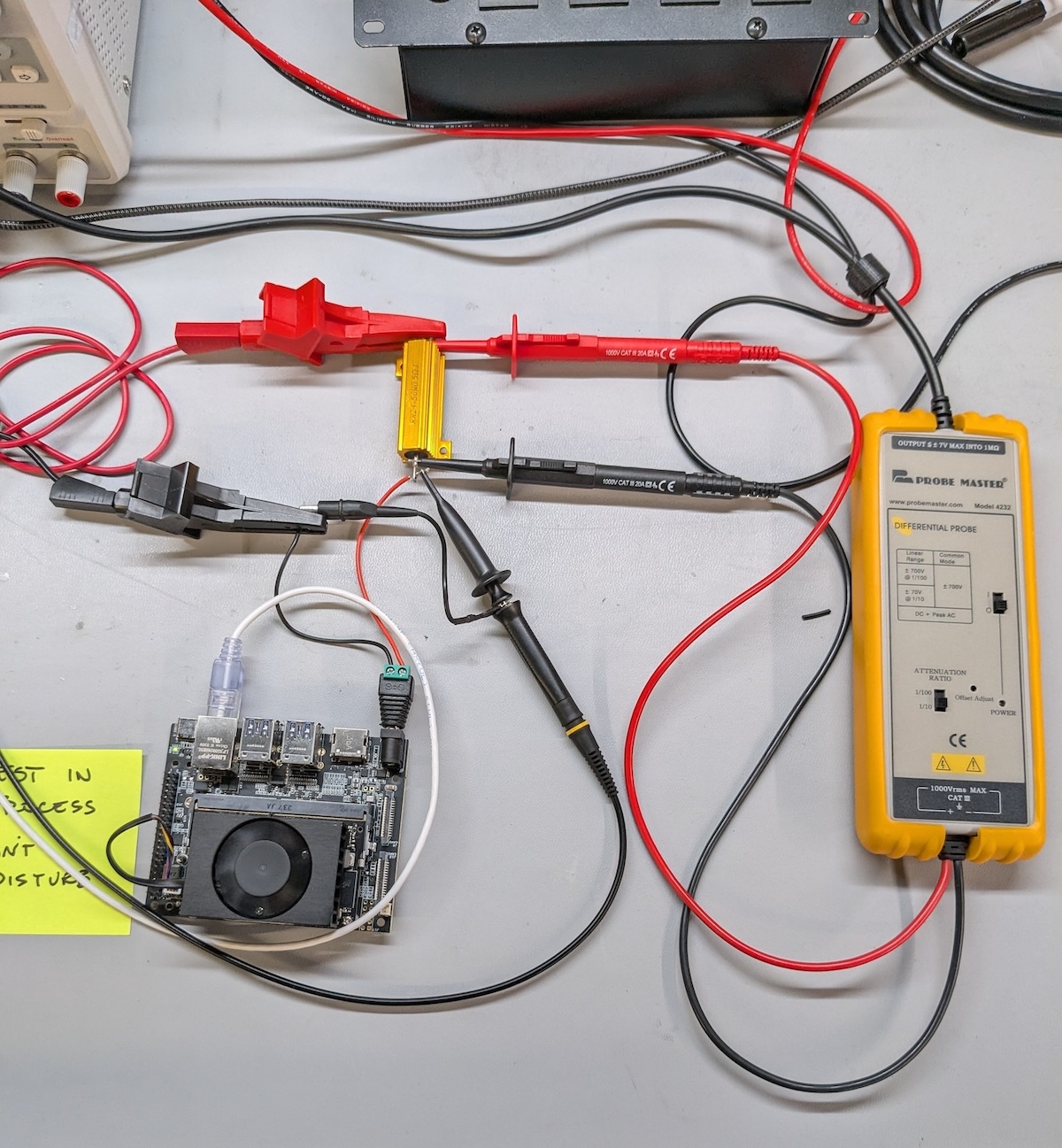

To measure the energy consumed during program execution, we used a current shunt, a differential probe, and an oscilloscope. As seen in the diagram and image below, the current shunt resistor was placed in series with the positive input power terminal on the Jetson Orin. Both the supply voltage and the current drawn were captured at high speed on two oscilloscope channels. The tests were run on a Jetson Orin NX 16 in MAXN power mode with the Linux performance governor enabled.

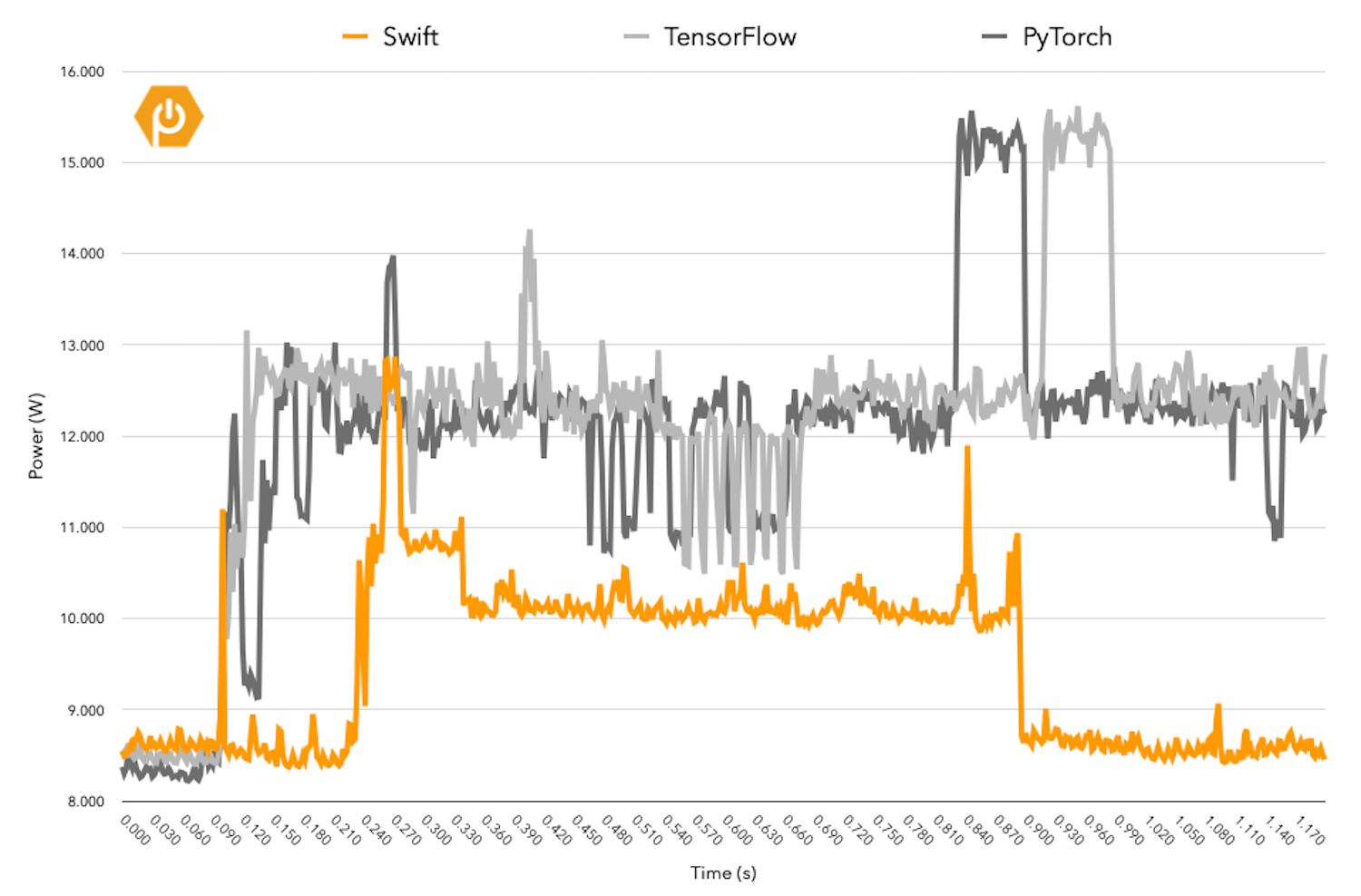

Each oscilloscope trace captures 1.2 seconds. Because two of the three simulations ran too long to be captured entirely, we measured the total run time of each simulation and used the oscilloscope measurements to determine the average power drawn during execution. We then projected the total energy consumed by each program by multiplying its average power (in watts) by its run time (in seconds).
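The arithmetic behind this projection can be sketched as follows, assuming one channel records the supply voltage and the other the voltage across the shunt (so current follows from Ohm's law, I = V_shunt / R_shunt) at a uniform sample rate. The function names, the shunt resistance, and the sample values are hypothetical; they are not given in the post.

```python
def average_power(v_supply, v_shunt, r_shunt_ohms):
    """Mean instantaneous power over a uniformly sampled capture, in watts.

    Power is computed sample by sample (P = V * I) before averaging, because
    mean(V * I) is not in general equal to mean(V) * mean(I).
    """
    if not v_supply or len(v_supply) != len(v_shunt):
        raise ValueError("need two non-empty channels of equal length")
    powers = [v * (vs / r_shunt_ohms) for v, vs in zip(v_supply, v_shunt)]
    return sum(powers) / len(powers)


def projected_energy(avg_power_w, runtime_s):
    """Total energy in joules: average power (W) times total run time (s)."""
    return avg_power_w * runtime_s


# Hypothetical capture: 15 V supply, 10 mOhm shunt reading 1 A then 2 A.
p = average_power([15.0, 15.0], [0.010, 0.020], 0.010)
print(p)                         # about 22.5 W
print(projected_energy(p, 2.0))  # about 45 J over a 2 s run
```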

In each test, we ran 5,000 trials of 1,000 timesteps each. We discarded the first three trials so that TensorFlow and PyTorch had fully completed their initial dispatch before we started measuring. Note that for small problem sets this favors TensorFlow and PyTorch, because dispatch is part of the real compute cost. The test measures only the cost of the differentiable backward pass, which is the underlying energy spent on training or inferencing multi-dimensional models.
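A timing loop that discards warm-up trials the way this paragraph describes might look like the following. It is a generic sketch, not the published harness; `benchmark` and `dummy_trial` are hypothetical names, and `run_trial` stands in for one 1,000-timestep simulation plus its backward pass.

```python
import time


def benchmark(run_trial, trials=5000, warmup=3):
    """Time each trial and drop the first `warmup` results.

    Early trials can include one-time costs (JIT compilation, kernel dispatch
    setup, cache warm-up) that would otherwise skew the steady-state numbers.
    """
    durations = []
    for i in range(trials):
        start = time.perf_counter()
        run_trial()
        elapsed = time.perf_counter() - start
        if i >= warmup:
            durations.append(elapsed)
    return durations


def dummy_trial():
    # Placeholder workload; a real trial would run the simulation and its reverse pass.
    sum(i * i for i in range(1000))


times = benchmark(dummy_trial, trials=50)
print(len(times), sum(times))
```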

Differentiable Swift consumed a mere 34 J/GOps, while TensorFlow consumed 33,713 J/GOps and PyTorch 168,245 J/GOps—as benchmarked on NVIDIA’s Jetson Orin processor.

Graph explanation: The graph above captures the level of power consumed in just over one second from the test’s total run-time. The Y-axis depicts average power in Watts; the X-axis is time in seconds. Differentiable Swift's test was completed in 0.62s. TensorFlow and PyTorch required more energy over a longer period—658.85s and 3,340.83s, respectively—to accomplish the same number of computations.
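The efficiency ratios quoted earlier follow directly from these per-operation figures. A quick check, using only the J/GOps values reported above (the helper names are our own):

```python
# Reported energy per billion operations on the Jetson Orin (J/GOps).
SWIFT = 34
TENSORFLOW = 33_713
PYTORCH = 168_245


def times_less_energy(baseline, candidate):
    """How many times less energy the candidate uses for the same work."""
    return baseline / candidate


def percent_reduction(baseline, candidate):
    """Energy saved relative to the baseline, as a percentage."""
    return 100.0 * (1.0 - candidate / baseline)


print(round(times_less_energy(TENSORFLOW, SWIFT)))     # 992
print(round(times_less_energy(PYTORCH, SWIFT)))        # 4948
print(round(percent_reduction(TENSORFLOW, SWIFT), 1))  # 99.9
print(round(percent_reduction(PYTORCH, SWIFT), 2))     # 99.98
```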

If you’d like to run the experiment for yourself, you can find the test on our GitHub.

Breaking Automatic Differentiation Free from the Deep Learning Prison

From a viewpoint that says “everything we consider foundational in mathematics is just as foundational in computing,” it was a fluke of history that programming languages weren’t built with first-class support for calculus from the start. All languages include the ability to multiply, divide, and exponentiate, but almost none contain knowledge of derivatives. There’s no great reason for excluding calculus as a functionality—it’s just math. The ability to seamlessly merge calculus and code empowers us to tackle previously intractable problems with unprecedented speed and accuracy.

This critique of most programming languages also extends to TensorFlow and PyTorch. They grew out of a narrow need to make deep learning easier and faster and treat autodiff only as a means to that end, even though autodiff is an amazing tool, applicable to endless computing situations beyond deep learning. Both frameworks lend themselves to a specific network shape which, though currently popular, constrains research, innovation, and exploration of AI’s potential. They are also slow for uses outside of deep learning and lack the tools needed for open exploration.

In the current deep learning paradigm, the reverse inferencing that enables back-propagation is confined to the training phase and isn’t used during deployment. By contrast, fast differentiation can use reverse inferencing in real time during deployment, supporting new kinds of multi-directional inference. When real-life situations can be inferenced faster than real time, true autonomy becomes possible: a system can “think” fast enough to adjust controls on demand, and AI can truly help us solve pressing problems.
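One simple way to picture this kind of runtime reverse inference: given a forward model and its derivative, solve for the input that produces a desired output. The sketch below uses Newton's method on a hypothetical, made-up model that maps a supply-water temperature to a room temperature. The derivative is written by hand here; in a differentiable language it would come from the compiler.

```python
import math


def room_temp(supply_temp):
    """Hypothetical forward model: room temperature (deg C) vs supply water temperature."""
    return 20.0 + 10.0 * math.tanh(0.1 * (supply_temp - 40.0))


def d_room_temp(supply_temp):
    """Derivative of room_temp with respect to supply_temp (hand-written here)."""
    t = math.tanh(0.1 * (supply_temp - 40.0))
    return 10.0 * 0.1 * (1.0 - t * t)


def infer_supply_temp(target, guess=40.0, tol=1e-10, max_iter=50):
    """Run the model 'backward': find the input whose output matches the target."""
    u = guess
    for _ in range(max_iter):
        error = room_temp(u) - target
        if abs(error) < tol:
            return u
        u -= error / d_room_temp(u)  # Newton step using the model's derivative
    raise RuntimeError("did not converge")


u = infer_supply_temp(25.0)
print(u, room_temp(u))  # supply temperature that yields a 25 deg C room
```

Newton's method is just one choice; gradient descent on the squared error would use the same derivative.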

Additionally, Swift’s strong type system and compile-time optimizations enable the compiler to generate highly optimized code, reducing runtime overhead and energy consumption. Swift's expressive syntax and modern features make it easier for developers to write efficient AI algorithms. Efficiency is an overall theme for Swift and is one of the reasons we decided to invest in its development.

Developing the Future of Differentiable Swift

PassiveLogic has built the first differentiable AI solution that enables true autonomy. We chose Swift because it is a modern, safe, general-purpose language that also supports autodiff. PassiveLogic has focused on advancing differentiable computing and elevating it for edge and industrial applications, replacing legacy languages like C++.

Key to PassiveLogic’s efficiency is our use of heterogeneous neural networks, which enable a flexible framework where models can be linked together. We’re proud of our ongoing work with the open-source Swift community and appreciate the support of the Swift Core Team. As a Swift language collaborator, our team has submitted thousands of commits and provided 33 patches and feature merges since August 2023.

PassiveLogic believes in a future where AI is accessible to all, without being bound by the constraints of traditional tools.

AI as a Force for Good

The narrative surrounding AI often focuses on its negative environmental impact. However, AI can be a powerful tool for addressing climate change. By making AI more energy-efficient and accessible, we can unlock its potential to foster a more sustainable future.

By prioritizing AI that is not only innovative but also efficient and functional at the edge, we position AI as a true partner. This approach reduces compute load, lowers energy consumption, and decreases server demand, all while developing systems that can learn and make increasingly efficient and sophisticated sustainability decisions.

PassiveLogic is committed to building AI that is both innovative and environmentally responsible. Our major contributions to Differentiable Swift are a step toward a future where AI not only drives progress but also catalyzes positive change.

By combining cutting-edge technology with a focus on sustainability, PassiveLogic is leading the charge toward a new era of AI.

We’re excited for what’s next. We hope you are too.

Follow us on LinkedIn and sign up for the latest PassiveLogic updates. Learn more about joining the team here.

Author: Troy Harvey

Written in collaboration with: Caroline Genster, Madison Hill

Technical leads: Jeremy Fillingim, Clack Cole, Porter Child, Brandon Schoenfeld, Evan Scullion