Heterogeneous Neural Networks: A New Approach to AI Framework Construction

Building the first platform for generative autonomy requires not only brand-new consumer-focused technologies but also the next generation of advanced artificial intelligence (AI). PassiveLogic is building new foundational technologies to advance AI and democratize its use beyond today’s language and image processing, applying it to real-world, seemingly intractable problems such as reducing building energy consumption and decarbonizing at scale.

Buildings are the largest, most complex, and most ubiquitous robots in the world. These infrastructural robots consume 40 percent of the world’s energy. According to the U.S. Department of Energy, optimizing the controlled systems in existing buildings could reduce their energy use by up to 40 percent.

The problem? Buildings are incredibly complex and can have up to a million I/O points, which makes them impractical to optimize with current building technology. For PassiveLogic to build the first platform for autonomous building control, we had to introduce a novel approach to AI and invent new foundational technology capable of handling the sheer computational load and complexity of this problem.

The solution? Using physics-informed neural networks (PINNs) to enable heterogeneous neural networks (HNNs). This industry first yields an introspectable, composable AI framework that is not only efficient but also grounded in the laws of physics, which guarantees that it is free from hallucinations and ensures reliable, accurate performance.

So, what exactly is a heterogeneous neural network? Let's break it down.

Heterogeneous

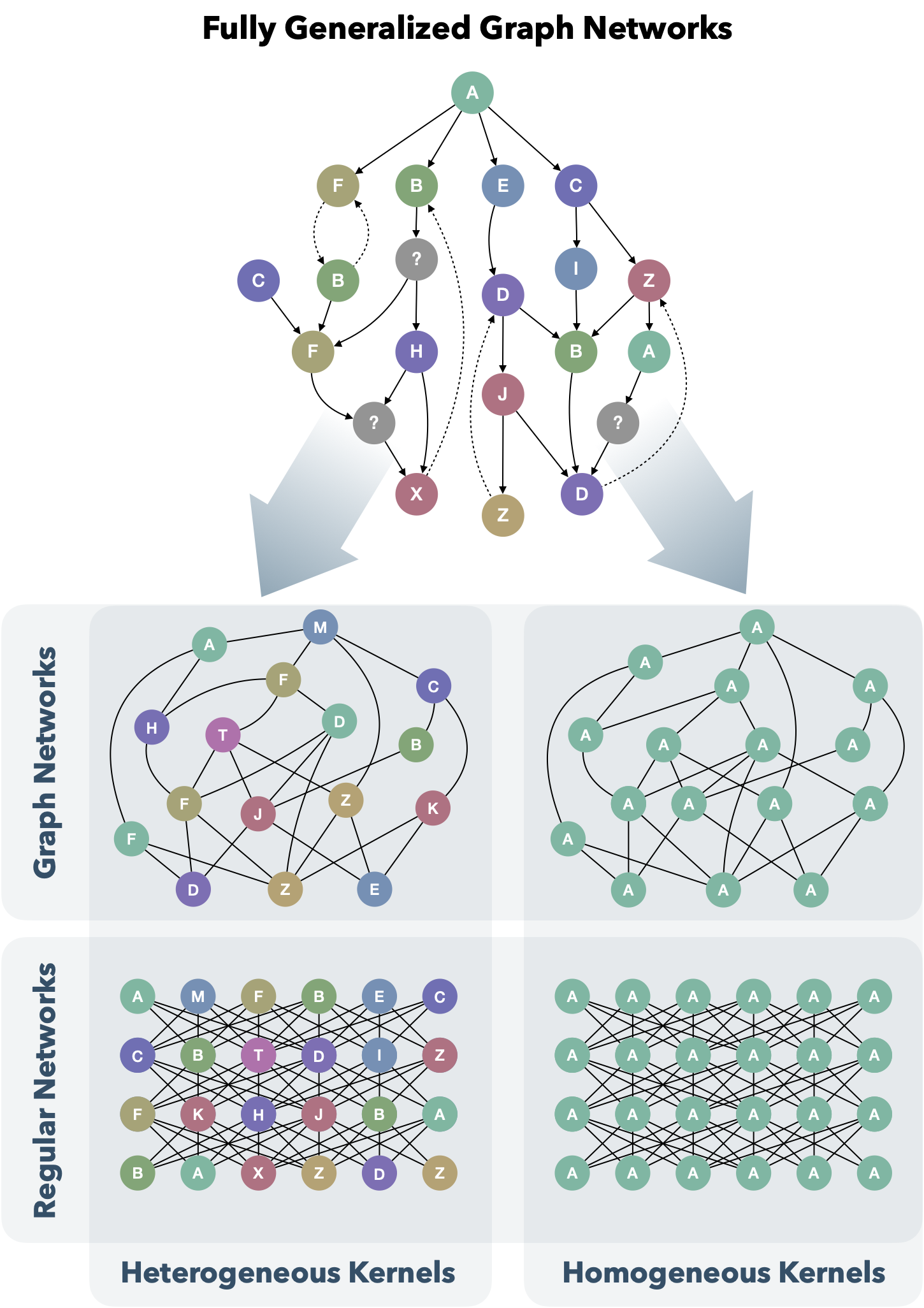

To understand this, it helps to first look at existing neural networks. Today’s leading AI technologies are built on homogeneous neural networks: the neurons are all of the same type, follow the same connection patterns, and process data uniformly. These networks are trained on one medium (text, images, etc.) and only work within that medium.

A heterogeneous neural network (or “net”) is a type of neural network that incorporates different variations of neurons, layers, or architectures within a single network. This heterogeneity can manifest in various ways, depending on the design and objectives of the network.

Attributes of heterogeneous neural networks include:

1. Diverse Neuron Types: Neurons can have varying activation functions, properties, or response patterns, unlike in traditional neural networks, where neurons within a layer usually share the same type and activation function.

2. Mixed Architectures: HNNs can combine different neural network architectures, such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), and fully connected networks, within the same model.

3. Layer Variety: Different layers might serve different functions and have different structures. For example, early layers might use convolutional layers for feature extraction, while later layers use fully connected layers for decision making.

4. Task-Specific Modules: HNNs might incorporate specialized modules designed for specific subtasks within a larger problem. For instance, in a multi-modal neural network processing text and image data, different parts of the network might be optimized for each data type before combining their outputs.

5. Dynamic and Adaptive Networks: HNNs might include mechanisms for dynamically adapting their structure and neuron types based on the input data or learning progress. This adaptability can lead to more efficient learning and generalization.
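To make the first attribute concrete, here is a minimal sketch, assuming plain dense neurons written in standard-library Python. The `Neuron` and `HeterogeneousLayer` names and the hand-picked weights are hypothetical, and this is not PassiveLogic's implementation; it only shows a single layer whose neurons each carry their own activation function.

```python
import math
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Neuron:
    """A single neuron; each instance carries its own activation function."""
    weights: List[float]
    bias: float
    activation: Callable[[float], float]

    def __call__(self, inputs: List[float]) -> float:
        # Weighted sum of inputs plus bias, passed through this neuron's activation.
        z = sum(w * x for w, x in zip(self.weights, inputs)) + self.bias
        return self.activation(z)


class HeterogeneousLayer:
    """A layer whose neurons need not share an activation function."""

    def __init__(self, neurons: List[Neuron]) -> None:
        self.neurons = neurons

    def __call__(self, inputs: List[float]) -> List[float]:
        return [neuron(inputs) for neuron in self.neurons]


def relu(z: float) -> float:
    return max(0.0, z)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def identity(z: float) -> float:
    return z


# Hidden layer mixing ReLU, sigmoid, and tanh neurons (weights chosen by hand).
hidden = HeterogeneousLayer([
    Neuron([0.5, -0.25], 0.0, relu),
    Neuron([0.0, 0.0], 0.0, sigmoid),
    Neuron([1.0, 1.0], -3.0, math.tanh),
])
output = HeterogeneousLayer([Neuron([1.0, 2.0, 3.0], 0.0, identity)])

h = hidden([1.0, 2.0])
y = output(h)
print(h, y)  # [0.0, 0.5, 0.0] [1.0]
```

The design choice worth noting is that the activation is a per-neuron property rather than a per-layer one; a real HNN would derive or learn its weights instead of fixing them by hand.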

Introspectable

With traditional homogeneous neural networks, the neurons only take shape as the network is trained on massive amounts of data. Once the model has been sufficiently trained, it can produce content. However, we have no visibility into how the neurons make decisions or what goes into their outputs.

In other words, with traditional AI models, we can see the input and the output, but we don’t know what is happening inside the model. They’re a black box. Because of this opacity, these models are inherently unstable and unreliable, which is a serious disadvantage when entire economic verticals are being built around them. We need these models to be both versatile and robust; we need to be able to look inside them and understand how they operate and why they make certain decisions. We need them to be introspectable.

"Introspectable" refers to the capability of an AI system to examine and report on its own internal processes, decision-making mechanisms, and reasoning pathways. This ability allows the AI to provide explanations for its actions and decisions, offering insights into how it arrived at a particular outcome, and even rectifying mistakes.

To be considered introspectable, a model must have:

1. Transparency: The AI can describe how it processes information and makes decisions. This helps users understand the logic behind the AI's outputs.

2. Explainability: The AI can articulate the reasons for its decisions, making it easier for humans to trust and verify its actions.

3. Maintainability: Developers can use introspection to identify and fix issues within the AI system, improving its performance and reliability.

4. Ethics and Accountability: The model can provide justifications for its behavior, which is crucial for addressing ethical concerns and ensuring accountability.

5. User Interaction: Users can query the AI about its processes, enhancing interaction and making the AI more user-friendly.
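One way to approach the transparency, explainability, and user-interaction properties listed above is for every intermediate quantity to carry a name and a unit, and for the model to keep a trace that users can query. The sketch below assumes a single hypothetical heat-loss node (Q = UA × ΔT); `TracedModel`, `record`, and `explain` are illustrative names, not a PassiveLogic API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TraceStep:
    name: str
    value: float
    units: str


@dataclass
class TracedModel:
    """Hypothetical model that records every named intermediate it computes."""
    trace: List[TraceStep] = field(default_factory=list)

    def record(self, name: str, value: float, units: str) -> float:
        self.trace.append(TraceStep(name, value, units))
        return value

    def heat_loss(self, ua: float, t_in: float, t_out: float) -> float:
        # Each step is recorded so the result can be explained afterwards.
        self.trace.clear()
        ua = self.record("envelope conductance UA", ua, "W/K")
        delta_t = self.record("indoor-outdoor difference", t_in - t_out, "K")
        return self.record("heat loss Q = UA * dT", ua * delta_t, "W")

    def explain(self) -> str:
        # Human-readable answer to "how did you get that number?"
        return "\n".join(f"{s.name} = {s.value:g} {s.units}" for s in self.trace)


model = TracedModel()
q = model.heat_loss(ua=150.0, t_in=21.0, t_out=1.0)
print(q)  # 3000.0
print(model.explain())
```

A trace like this exposes intermediate values with their physical meaning; it is a small illustration of the idea, not a full account of how a model reasons.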

Composable

"Composable" refers to the ability to create complex systems or functionalities by combining smaller, modular components in a flexible and reusable manner. This concept emphasizes modularity, interoperability, and reusability, allowing different AI models, algorithms, and processes to be easily integrated and reconfigured to achieve various tasks or goals.

To be considered composable, a model must have:

1. Modularity: The system must be built using discrete, self-contained components or modules, each performing a specific function. These modules can be independently developed, tested, and maintained.

2. Interoperability: The components are designed to work together seamlessly, often through standardized interfaces and protocols. This ensures that modules from different sources or with different underlying technologies can interact effectively.

3. Reusability: Components can be reused across different projects or applications, reducing redundancy and development time. Reusable modules help in leveraging existing solutions for new problems.

4. Flexibility: Composable AI systems can be easily reconfigured or extended by adding, removing, or replacing components. This flexibility allows for rapid adaptation to changing requirements or new insights.

5. Scalability: As needs grow, additional components can be integrated into the system without requiring a complete overhaul. This makes it easier to scale AI solutions to handle larger datasets or more complex tasks.
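The properties above can be sketched with a shared interface: each component declares the named signals it consumes and produces, and a composite chains components while checking that every input is available. Because the composite exposes the same interface, it can itself be nested. This is a minimal sketch under stated assumptions; `Component`, `Difference`, `Gain`, `Composite`, and the parameter values (a UA of 150 W/K and a 25 percent margin) are hypothetical.

```python
from typing import Dict, List, Protocol

Signals = Dict[str, float]


class Component(Protocol):
    """Standard interface: declared input/output names plus a step function."""

    @property
    def inputs(self) -> List[str]: ...

    @property
    def outputs(self) -> List[str]: ...

    def step(self, signals: Signals) -> Signals: ...


class Difference:
    """dst = a - b"""

    def __init__(self, a: str, b: str, dst: str) -> None:
        self.a, self.b, self.dst = a, b, dst
        self.inputs, self.outputs = [a, b], [dst]

    def step(self, signals: Signals) -> Signals:
        return {self.dst: signals[self.a] - signals[self.b]}


class Gain:
    """dst = k * src"""

    def __init__(self, src: str, dst: str, k: float) -> None:
        self.src, self.dst, self.k = src, dst, k
        self.inputs, self.outputs = [src], [dst]

    def step(self, signals: Signals) -> Signals:
        return {self.dst: self.k * signals[self.src]}


class Composite:
    """Chains components; it exposes the same interface, so it can be nested."""

    def __init__(self, components: List[Component]) -> None:
        self.components = list(components)

    @property
    def inputs(self) -> List[str]:
        # Names consumed by some component but not produced by an earlier one.
        produced: set = set()
        needed: List[str] = []
        for c in self.components:
            for n in c.inputs:
                if n not in produced and n not in needed:
                    needed.append(n)
            produced.update(c.outputs)
        return needed

    @property
    def outputs(self) -> List[str]:
        return [n for c in self.components for n in c.outputs]

    def step(self, signals: Signals) -> Signals:
        state = dict(signals)
        for c in self.components:
            missing = [n for n in c.inputs if n not in state]
            if missing:
                raise KeyError(f"{type(c).__name__} is missing inputs {missing}")
            state.update(c.step(state))
        return {n: state[n] for n in self.outputs}


zone = Composite([
    Difference("t_in", "t_out", "delta_t"),  # temperature difference in K
    Gain("delta_t", "q_loss", 150.0),         # hypothetical UA of 150 W/K
])
# Extend the system by wrapping the zone and appending a component, without editing it.
plant = Composite([zone, Gain("q_loss", "q_heater", 1.25)])  # hypothetical 25% margin
print(plant.inputs)  # ['t_in', 't_out']
print(plant.step({"t_in": 21.0, "t_out": 1.0}))
```

Running `plant.step` yields `delta_t` of 20.0, `q_loss` of 3000.0, and `q_heater` of 3750.0; swapping the margin component or the zone requires no change to the other parts.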

Physics-based

Humans have spent centuries learning the laws of physics; it would be a waste of time and energy to train an LLM to rediscover those laws when we can start with that knowledge embedded in the network. PassiveLogic’s approach opens up a new world of AI applications that are fast, efficient, stable, and secure. Because the laws of physics do not change, models based on them cannot hallucinate. This opens broad opportunities for autonomous control, from industrial systems to manufacturing to logistics. PassiveLogic is initially applying this technology to autonomous buildings, but the possibilities are virtually endless.
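As a small illustration of starting from known physics rather than learning it, the sketch below encodes a single-zone energy balance, C·dT/dt = UA·(T_out - T) + Q, directly as the model's structure, so its only parameters are physically meaningful (thermal capacitance C and envelope conductance UA). The lumped single-zone form, the forward-Euler integrator, and all parameter values are assumptions for illustration, not PassiveLogic's models.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ThermalZone:
    """Lumped single-zone model: C * dT/dt = UA * (T_out - T) + Q."""
    capacitance: float  # C, thermal mass in J/K
    conductance: float  # UA, envelope conductance in W/K

    def rate_of_change(self, t_zone: float, t_out: float, q_heat: float) -> float:
        # Energy balance: envelope heat flow plus added heat, divided by thermal mass.
        return (self.conductance * (t_out - t_zone) + q_heat) / self.capacitance

    def simulate(self, t0: float, t_out: float, q_heat: float,
                 dt: float, steps: int) -> List[float]:
        # Forward Euler: stable for dt < 2 * C / UA and monotone for dt < C / UA.
        temps = [t0]
        for _ in range(steps):
            temps.append(temps[-1] + dt * self.rate_of_change(temps[-1], t_out, q_heat))
        return temps


zone = ThermalZone(capacitance=1.0e7, conductance=200.0)  # time constant C/UA = 50,000 s
# With Q = UA * (T - T_out) = 200 * 20 = 4000 W, the zone holds at 20 °C.
steady = zone.simulate(t0=20.0, t_out=0.0, q_heat=4000.0, dt=60.0, steps=60)
# With heating off, the zone cools toward the outdoor temperature.
cooling = zone.simulate(t0=20.0, t_out=0.0, q_heat=0.0, dt=60.0, steps=60)
print(steady[-1], round(cooling[-1], 2))
```

Nothing here is fitted: the steady-state heating power and the cooling behavior follow from the energy balance itself.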

Bringing it all together: How PassiveLogic is using this technology

PassiveLogic has adopted a fundamentally different philosophy in the construction of its AI models to enable truly autonomous systems. We’ve spent years in development, securing over 70 issued patents and more than 130 pending patents. Built from the ground up, this technology is a massive departure from the deep-learning techniques used by generative AI companies today.

PassiveLogic’s approach differs from traditional models in several key ways:

- No training data: Because the physics is embedded in the neural network, additional training data is unnecessary. All of the physical knowledge needed to autonomously manage a system is already in the framework.

- No training time: With embedded physics, there is no separate training period. Instead, models can be composed from components at runtime. The model can then fine-tune its control of the system based on real-world sensor data without retraining.

- No overfitting: Unlike traditional models that become unstable when fed information outside of their training data, physics-informed neural networks are well-behaved, as their operation is based on consistent physical principles rather than specific data patterns.

- No hallucinations: PassiveLogic's AI ensures reliable, physics-based decisions, avoiding the hallucinations common in other AI models. This guarantees accuracy and safety in building automation.

- No black boxes: Gone are the indecipherable AI decisions. Because the model’s neural nets have defined behaviors, users can inspect and interpret them, seeing where decisions are being made and how the network is changing.
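To illustrate fine-tuning against real-world sensor data under a deliberately simple assumption, the sketch below calibrates a single physical parameter instead of training a network: given steady-state samples of heating power Q and indoor-outdoor temperature difference ΔT, the least-squares estimate of UA in Q = UA·ΔT is Σ(Q·ΔT) / Σ(ΔT²). The function name and readings are hypothetical, and this is ordinary least squares, not a description of PassiveLogic's calibration method.

```python
from typing import Sequence


def estimate_ua(delta_t: Sequence[float], q_heat: Sequence[float]) -> float:
    """Least-squares fit of UA in Q = UA * dT (a line through the origin).

    Minimizing sum((Q_i - UA * dT_i)^2) over UA gives
    UA = sum(Q_i * dT_i) / sum(dT_i^2).
    """
    if len(delta_t) != len(q_heat) or not delta_t:
        raise ValueError("need equal-length, non-empty samples")
    num = sum(q * d for q, d in zip(q_heat, delta_t))
    den = sum(d * d for d in delta_t)
    if den == 0.0:
        raise ValueError("temperature differences must not all be zero")
    return num / den


# Hypothetical steady-state readings: indoor-outdoor difference (K) and heater power (W).
delta_t = [10.0, 20.0, 5.0]
q_heat = [1520.0, 2980.0, 760.0]
ua = estimate_ua(delta_t, q_heat)
print(f"estimated UA = {ua:.1f} W/K")
```

Because the model's structure is fixed by physics, the result is a closed-form estimate of one interpretable number rather than a training run.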

This new deep-learning philosophy powers PassiveLogic’s Hive Autonomous Platform, part of PassiveLogic’s ecosystem of autonomous tools for decarbonization at scale. Heterogeneous neural nets are one of the key technology advancements behind PassiveLogic’s eight hardware and six software tools. These revolutionary tools are advancing AI for action and democratizing its applications, setting a new standard for building automation and beyond.