Intro to Differentiable Swift, Part 2: Differentiable Swift

So now we understand how to optimize a function with gradient descent, as long as we can get the function’s derivative. Great! If every function had an obvious derivative, we could optimize anything.

But not all functions do have an obvious derivative. Some are very complex, which makes finding the derivative by hand time-consuming.

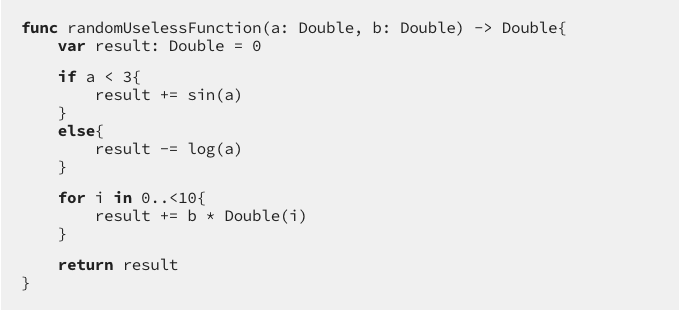

Plus, if we open up our range of possible functions to the world of code, then there are loops, if-else statements, complex objects, functions calling other functions, and much more. Calculating the derivative of a complex code function with pen and paper might take months!

Great news! It’s possible to get derivatives automatically! No pen and paper! You can write a function, say a magic incantation, and get the derivative for free!

In Swift, the magic incantation is:

import _Differentiation

Let’s see how it works!

Here’s the familiar f(x) = x²:

func f(x: Double) -> Double { return x * x }

Let’s get the derivative! Use gradient(at:of:):

let gradientAtThree = gradient(at: 3, of: f)

In English, gradient(at:of:) means “get the derivative of this function at this point.”

We know the derivative of x² is 2x, so at x = 3 it should be 2 · 3 = 6:

print("gradientAtThree:", gradientAtThree)
// gradientAtThree: 6.0
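One way to sanity-check an automatic derivative is to compare it against a numerical estimate. The sketch below is plain Swift and does not need the `_Differentiation` import. The helper name `numericalDerivative` and the step size `h` are illustrative choices, not part of the AutoDiff API.

```swift
// f(x) = x², redefined here so the sketch is self-contained.
func f(x: Double) -> Double {
    return x * x
}

// Hypothetical helper: central-difference estimate of df/dx at x.
// It evaluates the function slightly to the right and left of x
// and divides the difference in outputs by the distance between them.
func numericalDerivative(
    of function: (Double) -> Double,
    at x: Double,
    h: Double = 1e-5
) -> Double {
    return (function(x + h) - function(x - h)) / (2 * h)
}

let estimate = numericalDerivative(of: f, at: 3)
print("estimate:", estimate) // should be very close to 6
```

The estimate only approximates the derivative, and it costs two function calls per input. The value returned by gradient(at:of:) is computed from the code itself, with no step size to tune.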

Success!

Let’s take a step of gradient descent, just for fun. The gradient points uphill, so we step in the opposite direction by negating it, and we scale the step by 0.1 so it’s not too big:

let stepInXDirection = -gradientAtThree * 0.1
let newInput = 3 + stepInXDirection

After taking this step, the function output should be closer to zero:

print("oldOutput:", f(x: 3))
print("newOutput:", f(x: newInput))
// oldOutput: 9.0
// newOutput: 5.76

Indeed, it went from 9.0 to 5.76!
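Repeating that single step in a loop gives full gradient descent. The following is a minimal sketch, assuming a toolchain that ships the `_Differentiation` module. The helper `descend(from:steps:learningRate:)` and its parameter values are made up for illustration, and `f` is marked `@differentiable(reverse)` here only to make the intent explicit.

```swift
import _Differentiation

// Same f(x) = x² as in the article, explicitly marked as differentiable.
@differentiable(reverse)
func f(x: Double) -> Double {
    return x * x
}

// Hypothetical helper: run `steps` iterations of gradient descent on f.
func descend(from start: Double, steps: Int, learningRate: Double) -> Double {
    var x = start
    for _ in 0..<steps {
        // The slope at the current input, from automatic differentiation.
        let slope = gradient(at: x, of: f)
        // Step against the slope, scaled by the learning rate.
        x -= learningRate * slope
    }
    return x
}

let finalInput = descend(from: 3, steps: 20, learningRate: 0.1)
print("finalInput:", finalInput)
print("finalOutput:", f(x: finalInput))
```

For this particular function each step multiplies x by 1 - 0.1 · 2 = 0.8, so the input shrinks steadily toward 0, where x² has its minimum.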

Ok, what about something more complex?

Let’s try a random point:

let a: Double = -17
let b: Double = 22
let gradientAtAandB = gradient(at: a, b, of: randomUselessFunction)
print("function output:", randomUselessFunction(a: a, b: b))
print("derivatives to a and b at that point :", gradientAtAandB)
// function output: 990.9613974918796
// derivatives to a and b at that point : (-0.2751633, 45.0)

The derivative with respect to “b” is 45.0. Let’s see if stepping “b” by 1 actually moves the output up by 45:

let newB = b + 1
print("original output:", randomUselessFunction(a: a, b: newB - 1))
print("output with step:", randomUselessFunction(a: a, b: newB))
// original output: 990.9613974918796
// output with step: 1035.9613974918796

Yep!
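The same two-input pattern can be tried on any small function. The sketch below uses a hypothetical stand-in, `standIn(a:b:)`, which is not the article’s randomUselessFunction; it was chosen only because its derivatives are easy to check by hand (2a with respect to a, and 3 with respect to b). It assumes a toolchain that ships the `_Differentiation` module.

```swift
import _Differentiation

// Hypothetical stand-in, NOT the article's randomUselessFunction.
@differentiable(reverse)
func standIn(a: Double, b: Double) -> Double {
    return a * a + 3 * b
}

let a: Double = 2
let b: Double = 5

// gradient(at:_:of:) returns one partial derivative per input, as a tuple.
let (dA, dB) = gradient(at: a, b, of: standIn)
print("d/da:", dA, "d/db:", dB)

// The output is linear in b, so stepping b by 1 changes it by exactly dB.
let change = standIn(a: a, b: b + 1) - standIn(a: a, b: b)
print("change:", change)
```

A derivative is a local rate of change, so the "step by 1" check matches exactly only when the output is linear in the stepped input, as it is for b here. Stepping a by 1 instead would change the output by 5, not by the derivative value of 4, because the function curves in a.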

Did you ever think you would be able to do gradient descent on any old bit of code?

The future is now!

In Part 3, we’ll learn a bit more about the AutoDiff API.

Automatic Differentiation in Swift is still in beta. You can download an Xcode toolchain with import _Differentiation included from here (you must use a toolchain under the title “Snapshots -> Trunk Development (main)”).

When the compiler starts giving you errors you don’t recognize, check out this short guide on the less mature aspects of Differentiable Swift. (Automatic Differentiation in Swift has come a long way, but there are still sharp edges. They are slowly disappearing, though!)

Automatic Differentiation in Swift exists thanks to the Differentiable Swift authors (Richard Wei, Dan Zheng, Marc Rasi, Brad Larson et al.) and the Swift Community!

See the latest pull requests involving AutoDiff here.