Breaking the AI Speed Barrier: PassiveLogic’s Differentiable Swift Compiler

The AI Revolution at the Edge

For artificial intelligence, compute speed reigns supreme. The ability to rapidly process information is critical for unlocking the full potential of AI applications at the edge. Leading AI frameworks, while powerful, often struggle to keep up with the demands of real-time computation, and they struggle even more on resource-constrained devices.

Our work on the Differentiable Swift toolchain has shattered industry speed benchmarks, running hundreds of times faster than Google’s TensorFlow and thousands of times faster than Meta’s PyTorch on the gradient benchmark described below. By combining NVIDIA's Jetson Orin GPU with Differentiable Swift, PassiveLogic’s AI models can operate at unprecedented speeds, even on personal computers, smartphones, IoT systems, and small embedded devices.

The future of edge AI hinges on real-time inferencing, making the fastest general-purpose toolchain for embedded processors not just preferable, but essential. While speed is an advantage in all AI frameworks, it’s particularly critical for our heterogeneous AI models, which perform automatic differentiation at runtime, not just during training. Because these models are designed to continuously incorporate new data, the enhanced performance translates directly into learning that proceeds hundreds of times faster than before. The models can then process new information, adjust their decisions, and deliver outputs that better align with the intended goals. True autonomy requires real-time processing at the edge.

Record-Setting Compute Speed

We benchmarked our recently optimized Differentiable Swift toolchain against the industry standards, TensorFlow and PyTorch. Because Differentiable Swift isn’t opinionated about the form of computation, it can efficiently handle heterogeneous, scalar calculations. Popular frameworks’ narrow focus on massive, homogeneous neural-net matrix multiplications limits their ability to execute AI calculations that do not fit their deep-learning paradigm.
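To make "heterogeneous, scalar calculations" a little more concrete, here is a minimal sketch of reverse-mode automatic differentiation over ordinary scalar code that contains a branch. It is plain Python written for illustration only, not Differentiable Swift and not our benchmark code; the `Var` class, the `backward` function, and the `heat_flow` example are hypothetical names introduced here.

```python
class Var:
    """A scalar that remembers which values it was computed from."""

    def __init__(self, value, parents=()):
        self.value = float(value)
        self.parents = parents  # tuple of (parent Var, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        other = _wrap(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __sub__(self, other):
        other = _wrap(other)
        return Var(self.value - other.value, ((self, 1.0), (other, -1.0)))

    def __mul__(self, other):
        other = _wrap(other)
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    __radd__ = __add__
    __rmul__ = __mul__


def _wrap(x):
    """Promote plain numbers to constant Var nodes."""
    return x if isinstance(x, Var) else Var(x)


def backward(output):
    """Accumulate d(output)/d(node) into .grad for every node that feeds output."""
    order, seen = [], set()

    def visit(node):  # depth-first post-order gives a topological order
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)  # recursive, so only suitable for small graphs like this one
    output.grad = 1.0
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += node.grad * local


def heat_flow(conductance, t_hot, t_cold):
    """Hypothetical scalar model with ordinary control flow."""
    delta = t_hot - t_cold
    if delta.value > 0:
        return conductance * delta
    return conductance * delta * 0.5


k, t_hot, t_cold = Var(2.0), Var(60.0), Var(20.0)
q = heat_flow(k, t_hot, t_cold)
backward(q)
print(q.value, k.grad, t_hot.grad, t_cold.grad)
```

Nothing in this sketch requires the computation to be a matrix multiplication: each scalar operation records its own local derivative, and the backward pass simply walks those records in reverse.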

When comparing the time it takes to calculate the gradient at 100,000 timesteps (the number of state updates over the course of the simulation), Differentiable Swift performed 683x faster than TensorFlow and 3,610x faster than PyTorch. Measured in functional operations per second, Differentiable Swift clocked 42,974,432 ops/sec, compared to TensorFlow at 62,845 ops/sec and PyTorch at 11,902 ops/sec.
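The speedup multiples follow directly from the ops/sec figures. As a quick check, this short Python snippet divides the Differentiable Swift rate by each competitor's rate; the dictionary and variable names are ours, and the only data used are the three numbers quoted above.

```python
# Throughput figures quoted above, in operations per second.
ops_per_sec = {
    "Differentiable Swift": 42_974_432,
    "TensorFlow": 62_845,
    "PyTorch": 11_902,
}

swift_rate = ops_per_sec["Differentiable Swift"]

# Speedup = Swift throughput divided by the other framework's throughput.
speedups = {
    name: swift_rate / rate
    for name, rate in ops_per_sec.items()
    if name != "Differentiable Swift"
}

for name, ratio in speedups.items():
    print(f"{name}: {ratio:.1f}x")
```

The ratios come out at roughly 683.8 and 3,610.7, which match the 683x and 3,610x figures once the fractional part is dropped.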

Since last year’s benchmark, Differentiable Swift has increased its lead by over 740%. These gains are a testament to PassiveLogic’s ongoing advancements in Differentiable Swift and its ability to push the boundaries of AI computation.

But speed is just one piece of the puzzle. The real power of Differentiable Swift lies in its ability to unlock new possibilities for AI applications. By enabling AI models to learn and adapt in real time, Differentiable Swift opens the door to a wide range of innovative use cases.

The Methodology

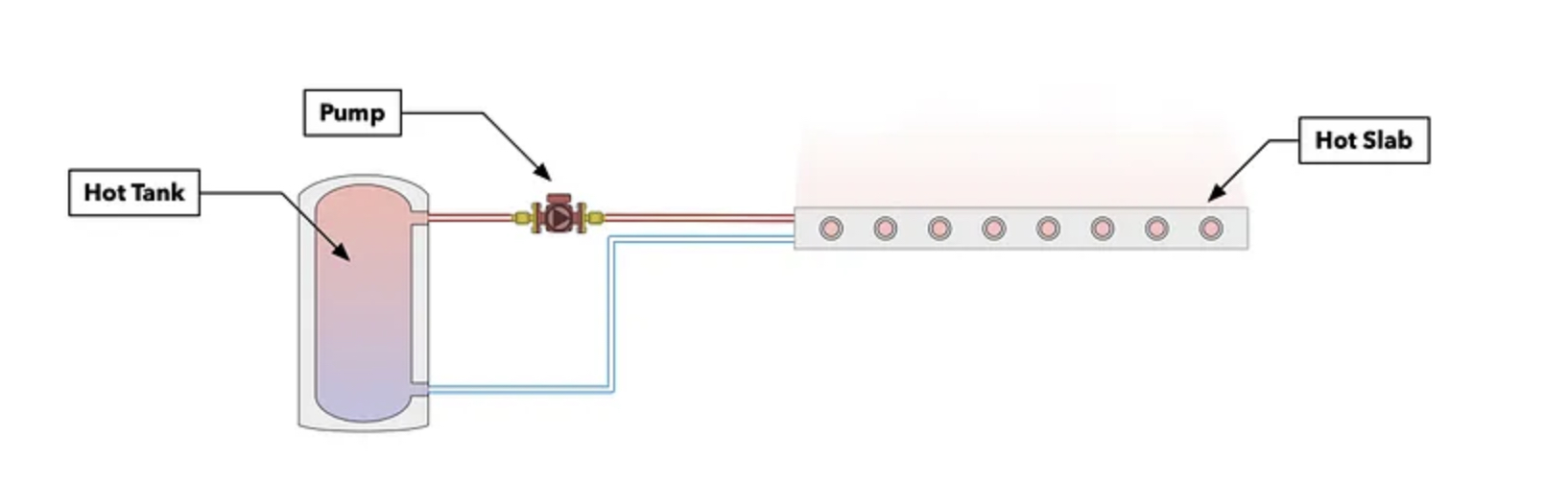

To measure performance, we used a simulation of a thermal system, with automatic differentiation calculating the gradients. The thermal system consists of a concrete slab, a tube, a tank, and quanta that run throughout. The heat transfer process is modeled over a chosen number of timesteps, and the entire simulation is repeated over a number of trials. The time it takes to run each simulation's forward and gradient passes within a trial is recorded and averaged as the core measurement for comparison between Differentiable Swift, PyTorch, and TensorFlow.

Image description: A schematic of the equipment system we are modeling for this benchmark, including a tank, a flat radiator slab, and a pipe connecting them.

The benchmark accepts the number of trials and timesteps as parameters, allowing for experimentation on how increasing numbers of trials and timesteps affect simulation speeds. While timesteps determine the length of a simulation, trials determine how many times we repeat each simulation. Adjusting the number of timesteps affects the overall computational load, while increasing trials increases the sample size, giving a more consistent average.
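The shape of such a harness can be sketched in a few lines. The Python below is a simplified stand-in, not the benchmark published on our GitHub: it replaces the slab, tube, and tank with a single temperature relaxing toward a tank temperature, and it writes the reverse (gradient) pass by hand instead of using an autodiff library. All function names, parameters, and constants are assumptions made for illustration.

```python
import time


def simulate(k, timesteps, t0=20.0, t_tank=60.0, dt=0.01):
    """Forward pass: one temperature relaxing toward the tank temperature."""
    temps = [t0]
    for _ in range(timesteps):
        t = temps[-1]
        temps.append(t + dt * k * (t_tank - t))
    return temps


def loss_and_gradient(k, timesteps, target=25.0, t0=20.0, t_tank=60.0, dt=0.01):
    """Forward pass plus a hand-written reverse pass for d(loss)/dk."""
    temps = simulate(k, timesteps, t0, t_tank, dt)
    loss = (temps[-1] - target) ** 2

    adjoint = 2.0 * (temps[-1] - target)  # d(loss)/d(final temperature)
    grad_k = 0.0
    for t in reversed(temps[:-1]):  # walk the timesteps backwards
        grad_k += adjoint * dt * (t_tank - t)  # direct dependence on k
        adjoint *= 1.0 - dt * k  # propagate to the previous temperature
    return loss, grad_k


def benchmark(trials, timesteps, k=0.5):
    """Average wall-clock seconds for one forward + gradient pass."""
    total = 0.0
    for _ in range(trials):
        start = time.perf_counter()
        loss_and_gradient(k, timesteps)
        total += time.perf_counter() - start
    return total / trials


if __name__ == "__main__":
    for steps in (20, 1_000, 100_000):
        print(steps, benchmark(trials=5, timesteps=steps))
```

Raising `timesteps` lengthens each forward and gradient pass, while raising `trials` only changes how many timings are averaged, which mirrors the two parameters described above.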

But how does this benchmark compare to last fall’s benchmark announcement?

For the most part, the simulation remains the same, with only a few adjustments. Last year, the run was executed once at 20 timesteps over 30 trials. This time, we've run multiple rounds, scaling in each dimension—trials and timesteps—up to 100k. This run gives us a wider view of the performance differences between each framework.

Another notable difference between the runs is that for larger timestep values, we encountered some inconsistencies and performance warnings from TensorFlow. After resolving those issues, TensorFlow performance improved for both gradient speed and memory usage.

Interestingly, when we attempted to run 1,000,000 timesteps, Swift and TensorFlow were able to finish the test. However, PyTorch was unable to finish because its process was terminated by the kernel when it ran out of memory. We will delve deeper into the importance of memory utilization in the coming weeks.

The NVIDIA Jetson Orin processor, the fastest and most popular processor available for mobile and edge AI computing, allowed us to run these computations as fast as currently possible. I often say, “You’re only as fast as your fastest processor,” meaning you can’t just throw more servers at these models to improve their compute time. Running Differentiable Swift on this processor lets the model perform inference faster than real time while remaining efficient enough to fit inside mobile and small edge devices. Improving processing power while shrinking the real estate needed to support that processing enables AI to be leveraged in novel applications. This will expand what’s possible for deep learning and transform AI innovation around the world.

Additional details about the test can be found on our GitHub.

Deep Learning, Reimagined

Today’s understanding of “deep learning” is largely limited to image generators and LLMs. But at PassiveLogic, we’re working to advance deep learning beyond its current limits. Is deep learning just static, pre-trained, homogeneous neural nets? Or is true deep learning the ability to train any kind of model you can imagine, with any number of parameters? To us, true deep learning is about the depth of its variables.

In our recent article on Differentiable Swift’s latest energy savings, we outlined the questions we’re solving with our extensive work on Differentiable Swift:

- What if we could enable heterogeneous, fully generalized graph networks—no matter how complex or general purpose?

- What if AI and application code could merge into a single performant systems language?

- What if autodiff wasn’t just for training, but fast enough for inferencing models in arbitrary dimensions?

- What if model learning needed so little energy that it could happen at the edge, at runtime, in battery-powered applications like robotics?

In the current deep learning paradigm, the backpropagation enabling reverse inferencing is confined to the training phase. It’s locked away once the model is trained and isn’t used again after deployment. That’s a loss for all users who would benefit from models learning on the job. In contrast, Differentiable Swift employs ultra-fast differentiation that can use reverse inferencing in real time, both in training and during deployment, supporting new kinds of multi-directional inference. When real-life situations can leverage these abilities, true autonomy becomes possible.
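One way to picture gradients being used after deployment is a model that keeps calibrating one of its own parameters as measurements arrive. The Python sketch below is a toy illustration under our own assumptions, not PassiveLogic's method: a one-parameter thermal model predicts the next temperature, compares it with the observed one, and nudges a conductance `k` along the gradient of the squared error. The function names, step size, and synthetic data stream are all hypothetical.

```python
def make_observations(k_true, steps, t0=20.0, t_tank=60.0, dt=0.1):
    """Synthetic sensor stream generated by a 'true' conductance k_true."""
    temps = [t0]
    for _ in range(steps):
        t = temps[-1]
        temps.append(t + dt * k_true * (t_tank - t))
    return temps


def online_calibration(observations, k=0.2, t_tank=60.0, dt=0.1, lr=0.01):
    """Update k once per new measurement, using the gradient of the error."""
    for t_now, t_next in zip(observations, observations[1:]):
        predicted = t_now + dt * k * (t_tank - t_now)  # forward pass
        error = predicted - t_next
        grad_k = 2.0 * error * dt * (t_tank - t_now)  # d(error**2)/dk
        k -= lr * grad_k  # one gradient step per observation
    return k


stream = make_observations(k_true=0.05, steps=50)
estimate = online_calibration(stream)
print(estimate)
```

In this toy setup the estimate starts at 0.2 and moves toward the value that generated the data, 0.05, without a separate training phase. The same loop run on a device depends on the gradient being cheap enough to compute between measurements.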

PassiveLogic’s extensive work on Differentiable Swift is expanding applications for novel AI models, moving beyond the current deep learning orthodoxy. These contributions open doors to countless new AI methodologies where experimentation was previously difficult—with uses in everything from physics, economics, and philosophy to engineering, autonomous systems, and user experience. While current deep learning has enabled a very specific approach to AI, there is so much more to explore.

At the heart of Differentiable Swift is a thorough understanding of deep learning. Unlike traditional approaches that rely on homogeneous neural networks, Differentiable Swift empowers developers to create highly customized models tailored to specific tasks. This flexibility is essential for addressing the complexities of real-world problems.

Novel AI Applications

Differentiable Swift's versatility extends beyond traditional deep learning, making it the first truly general-purpose AI compiler. It can be applied to a variety of AI techniques, including:

- Heterogeneous compute: Combining different types of processors for optimal performance.

- Ontological AI: Using knowledge graphs to represent and reason about complex information.

- Graph neural networks: Analyzing and understanding relationships within data structures.

- Continual learning: Enabling AI models to learn from new data without forgetting previously acquired knowledge.

Edge compute is critical to an autonomous future; no system can be truly autonomous if it must always be plugged in or requires constant interaction with the cloud to function. Data centers are not a solution for autonomy in embedded applications like robots and logistics. Autonomous systems must optimize energy efficiency, speed, and memory utilization. Achieving a balance among all three supports real-time decision-making. Our unprecedented speed translates to significant energy savings and reduced operational costs. PassiveLogic’s Differentiable Swift toolchain, in combination with NVIDIA’s Jetson Orin processor, strikes this balance to deliver superior compute performance for heterogeneous AI models.

The Future of AI

PassiveLogic's Differentiable Swift compiler is a game-changer. It not only sets a new standard for AI speed but also paves the way for a future where intelligent systems can seamlessly integrate into our daily lives. We’re committed to building AI that is both innovative and environmentally responsible. Our major contributions to Differentiable Swift are a step toward a future where AI not only drives progress but also catalyzes positive change.

By unlocking the full potential of AI at the edge, Differentiable Swift is driving a revolution that will shape the world for generations to come.


Author: Troy Harvey

Subject matter expert: Clack Cole

Written in collaboration with: Caroline Genster, Madison Hill